Generative AI Is Working Its Way Into Your Business – Are You Ready?

Generative AI is capturing the public imagination in a way few technical breakthroughs have since Thomas Edison delighted audiences with the earliest motion pictures over a century ago. Armed with little more than a keyboard, anyone today can conjure up a dazzling array of media – from artistic imagery to code to nuanced articles on a wide range of subjects – in a few seconds. With text as a universal interface, the possibilities seem endless.

As with any disruptive technology, many executives are wondering what generative AI means for their business, particularly in a turbulent economic environment where productivity gains take on elevated importance. Here are a few tips for developing a generative AI strategy (hint: it starts with acknowledging that some of your employees are already using these tools, possibly without even realizing it).

What Is Generative AI?

Generative AI generally refers to machine learning (ML) models and applications that produce content from text prompts. Under the hood, generative tech is typically powered by sophisticated transformer models that contain billions of parameters and are trained on massive corpora of data. Examples of publicly available and open-source generative AI applications include text-to-image generators like OpenAI’s DALL-E 2 and Stability AI’s Stable Diffusion 2, as well as large language model (LLM)-powered applications like GitHub Copilot – which turns text prompts into code and functions – and ChatGPT, which is billed as a chatbot but displays a wider variety of skills.

What Makes Generative AI Distinct From Prior Digital Revolutions?

Compared to previous waves of digital transformation that played out over decades, generative AI is evolving before our eyes. Since developers can use pre-trained models as a starting point rather than having to train a new model from scratch – a concept known as transfer learning – the pace of innovation is unlikely to slow down anytime soon. Indeed, GPT-4 is widely rumored to launch early next year.

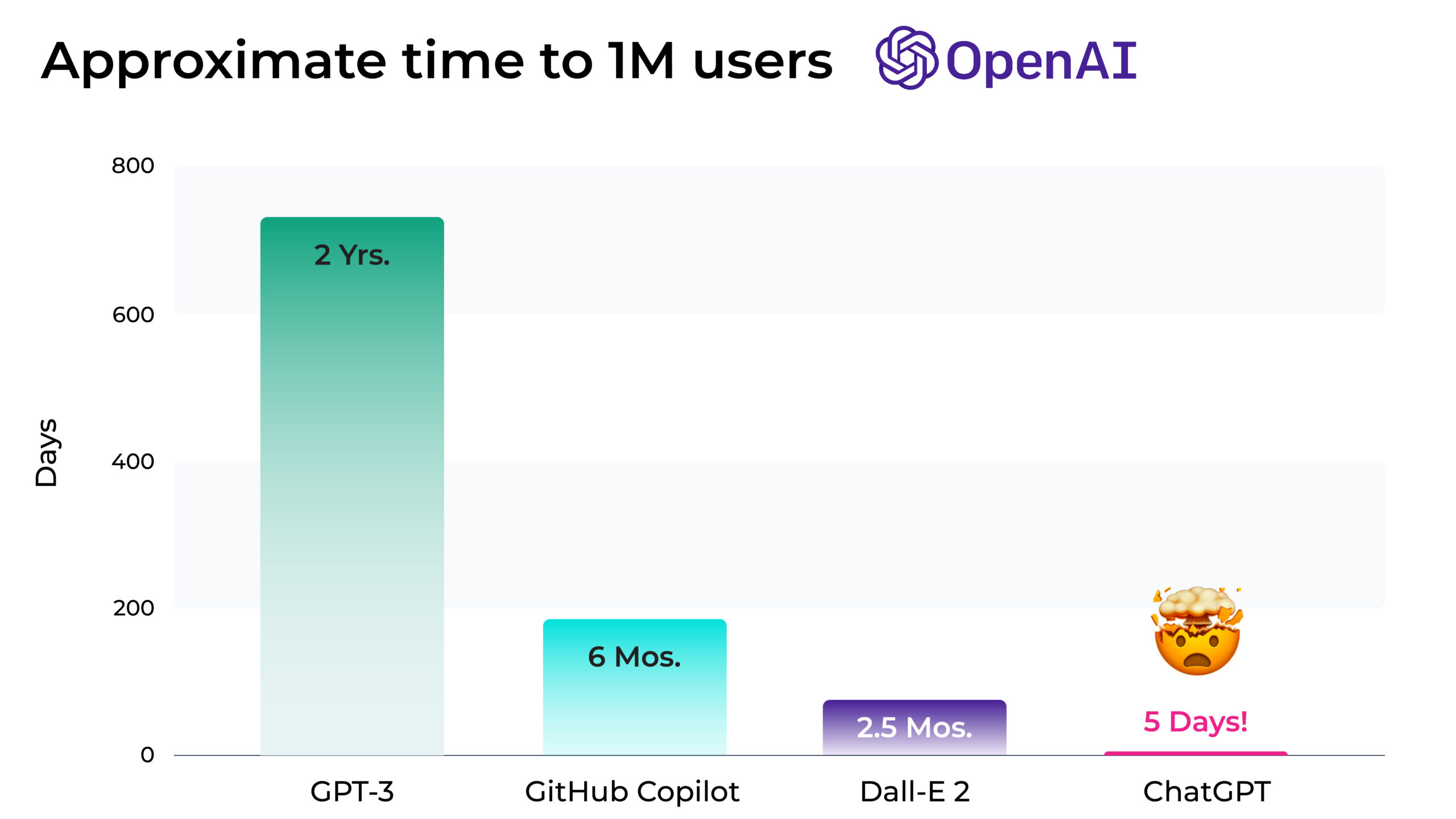

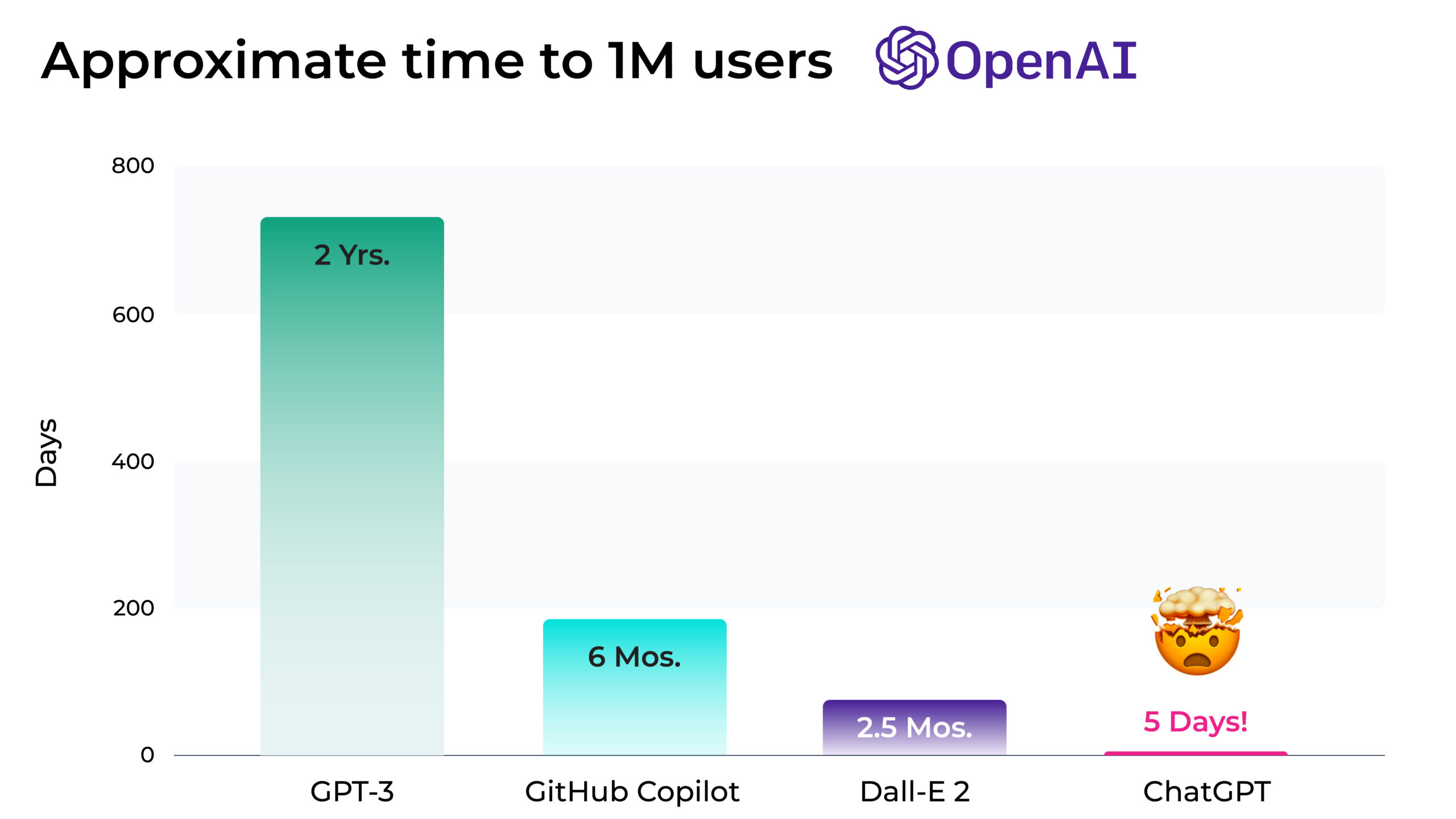

Adoption of generative AI is also accelerating. GitHub Copilot, for example, eclipsed one million users in its first six months and is credited with increasing developer productivity by 1.5 times. Marketers and their agencies are similarly embracing generative tech for tasks like developing marketing content and ad creative, aiming to reduce overhead and boost creativity.

The technical depth of these breakthroughs is also worth highlighting. Generative AI models are far more complex and difficult to design, implement, and maintain than traditional software. Since the premiere of the first-ever transformer model, developed by Google Brain, dozens of companies have built even more powerful models. OpenAI’s ChatGPT, for example, adds reinforcement learning from human feedback (RLHF) as its not-so-secret sauce for aligning the model’s responses with what captivates and delights human users.

What Should You Keep In Mind When Adding Generative AI To Your Business?

Plan For Outages

Any team with generative AI in production systems should warn DevOps to be ready for outages. Why might this persist as a problem? Transformer models use “a massive amount of computing, and the output is models that would not fit on commodity hardware,” noted Jeff Boudier, Product Director at Hugging Face, at a conference earlier this year. “It’s a challenge for enterprises today to take those models…and deploy them very efficiently.” Peter Welinder, VP of Product & Partnerships at OpenAI, concurs: “It’s quite complex to get these models to run fast at scale, and that’s where we focus — making sure it’s reliable and fast and cost effective to make it easier for people.”
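One common way for an application to absorb transient outages from a hosted model is to wrap each call in retries with capped exponential backoff and jitter. The sketch below is a minimal illustration under stated assumptions: `TransientError` and `flaky_call_model` are hypothetical names, not part of any real SDK, and the delay values are illustrative defaults rather than any provider's recommendation.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical error raised for timeouts, rate limits, or 5xx responses."""


def with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on TransientError with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of retries: surface the outage to the caller
            # The cap doubles each attempt (0.5s, 1s, 2s, ...) up to max_delay; sleeping a
            # random time below it ("full jitter") keeps many clients from retrying in lockstep.
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable: the loop always returns or raises")


# Usage: simulate a model endpoint that fails twice, then succeeds.
calls = {"n": 0}


def flaky_call_model() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("service unavailable")
    return "generated text"


result = with_backoff(flaky_call_model, sleep=lambda s: None)  # no real sleeping in the demo
print(result, calls["n"])  # generated text 3
```

Injecting `sleep` keeps the helper testable; in production the default `time.sleep` applies. Retries only smooth over brief blips, so a longer outage still needs a fallback path, such as a cached or canned response.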

Proactively Identify and Mitigate Bias

Harmful biases lurk in prominent text-to-image and large language models. Effective mitigation starts with an awareness of how models work and the types of bias that exist and might emerge in production. These include biases in the data, such as representation bias (e.g., datasets that underrepresent some groups or reflect historical inequities) and measurement bias (e.g., flawed proxies or sample size disparities), as well as biases introduced when building and evaluating the model, such as evaluation bias (e.g., using a benchmark for model evaluation that is not representative of the general population).
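As a rough first check for representation bias, a team might compare each group's share of a dataset with that group's expected share of the population the model is meant to serve. The sketch below is illustrative only: the group labels, the reference shares, and the 0.8 cutoff are hypothetical assumptions, and a real audit would use domain-specific groups and proper statistical tests.

```python
from collections import Counter
from typing import Dict, Iterable, List


def representation_ratios(
    group_labels: Iterable[str], reference_shares: Dict[str, float]
) -> Dict[str, float]:
    """Observed share of each group divided by its expected share in the reference population.

    A ratio well below 1.0 means the group is underrepresented in the data;
    well above 1.0 means it is overrepresented. Groups with a zero reference share are skipped.
    """
    counts = Counter(group_labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no group labels provided")
    return {
        group: (counts.get(group, 0) / total) / expected
        for group, expected in reference_shares.items()
        if expected > 0
    }


def underrepresented(ratios: Dict[str, float], threshold: float = 0.8) -> List[str]:
    """Groups whose ratio falls below an (illustrative) threshold, sorted by name."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical dataset: 100 training images tagged by the region they depict.
labels = ["north"] * 70 + ["south"] * 20 + ["east"] * 10
reference = {"north": 0.4, "south": 0.4, "east": 0.2}  # assumed population shares

ratios = representation_ratios(labels, reference)
print({group: round(ratio, 2) for group, ratio in ratios.items()})
# {'north': 1.75, 'south': 0.5, 'east': 0.5}
print(underrepresented(ratios))  # ['east', 'south']
```

A check like this only catches skew in labeled attributes. It says nothing about measurement or evaluation bias, which call for scrutiny of the features, labels, and benchmarks themselves.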

There are a few ways to proactively safeguard against these biases. OpenAI, for example, offers a moderation endpoint to “check whether content complies with OpenAI’s content policy,” which developers can use to identify and filter out harmful content at the serving layer. Teams can also add a layer of protection upstream by simply avoiding topics or datasets where biases are known to cause models to behave in discriminatory ways. While these approaches are unlikely to catch everything, they are a good starting point in a rapidly evolving space.
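The two safeguards described above, avoiding certain topics upstream and filtering output at the serving layer, can be combined into a single gate around the model call. In this minimal sketch, `generate` and `moderate` are injected stand-ins: in production, `moderate` might wrap a hosted service such as OpenAI's moderation endpoint (whose results include a boolean `flagged` field), but that wiring is not shown. The blocked-topic list, fallback message, and toy functions are hypothetical, and the substring match is deliberately naive.

```python
from dataclasses import dataclass
from typing import Callable, Iterable

# Hypothetical topics a team has decided to keep the model away from entirely.
BLOCKED_TOPICS = ("medical diagnosis", "credit decision")
FALLBACK = "Sorry, I can't help with that request."


@dataclass
class GateResult:
    text: str
    blocked: bool
    reason: str = ""


def guarded_generate(
    prompt: str,
    generate: Callable[[str], str],
    moderate: Callable[[str], bool],
    blocked_topics: Iterable[str] = BLOCKED_TOPICS,
) -> GateResult:
    """Generate a response, screening both the prompt and the model output.

    `generate` calls the model and `moderate` returns True when text should be
    flagged; both are injected so any model or moderation service can be plugged in.
    """
    lowered = prompt.lower()
    for topic in blocked_topics:
        # Upstream guard: out-of-scope topics never reach the model (naive substring match).
        if topic in lowered:
            return GateResult(FALLBACK, blocked=True, reason=f"blocked topic: {topic}")
    if moderate(prompt):
        return GateResult(FALLBACK, blocked=True, reason="prompt flagged")
    output = generate(prompt)
    # Serving-layer guard: withhold any output the moderation check flags.
    if moderate(output):
        return GateResult(FALLBACK, blocked=True, reason="output flagged")
    return GateResult(output, blocked=False)


# Toy stand-ins for a real model and a real moderation service.
def fake_generate(prompt: str) -> str:
    return f"Draft copy for: {prompt}"


def fake_moderate(text: str) -> bool:
    return "hateful" in text.lower()


print(guarded_generate("Write a tagline for our spring sale", fake_generate, fake_moderate).text)
print(guarded_generate("Give a medical diagnosis for this rash", fake_generate, fake_moderate).reason)
```

Returning a `reason` alongside the fallback text makes it easy to log which guard fired. That log is useful when reviewing whether the gate is too strict or too lenient.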

Do Not Trust; Verify

“In The Beginning of Infinity I invented a story to illustrate that even logically consistent fiction can be a bad explanation. I asked ChatGPT about it: pic.twitter.com/ffD8mGZga4”

David Deutsch (@DavidDeutschOxf), December 12, 2022

For large language models, there continue to be known issues around robustness and truthfulness. As OpenAI CEO Sam Altman himself notes on Twitter, “ChatGPT is incredibly limited, but good enough at some things to create a misleading impression of greatness. It’s a mistake to be relying on it for anything important right now.” As always, human review is important. Given the nature of early applications of generative AI – such as generating code or content for human review – this is likely already happening.

Ensure Proper Governance

Most enterprises have robust policies, processes, and systems in place to ensure ethical practices, govern user permissions around data, and manage the business risks that come with AI and data management. Generative AI should be no different.

What Will It Take For Generative AI To Reach Maturity?

Here are two areas that will likely be critical to the future growth of generative AI.

Legal Certainty

Last month, a lawsuit was filed against OpenAI and Microsoft’s GitHub that could set new legal precedents in the U.S. around fair use and copyright infringement in the context of training data. How this case and global AI regulations play out in the coming years will be key to lifting the legal cloud that currently hangs over the space.

Dedicated Infrastructure & Ecosystem

Generative AI still lacks a dedicated ecosystem of tools for building, debugging, and troubleshooting. While a variety of existing DevOps and MLOps tools can help, most are not built specifically to handle unstructured data – let alone black-box generative AI models that output unstructured images or text. Building tools that monitor and explain large language models at scale, and that surface actionable insights, is a priority for Arize in the coming year.
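One signal that tooling for unstructured data might compute is embedding drift. The idea is to embed model inputs or outputs, then track how far the average (centroid) embedding of recent production traffic has moved from a baseline window. The sketch below is a simplified illustration, not a description of how Arize or any other product measures drift. It uses short plain-Python lists as stand-in embeddings, and the vectors and alert threshold are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def centroid(embeddings: Sequence[Vector]) -> List[float]:
    """Element-wise mean of a non-empty set of equal-length embedding vectors."""
    if not embeddings:
        raise ValueError("need at least one embedding")
    dim = len(embeddings[0])
    if any(len(e) != dim for e in embeddings):
        raise ValueError("all embeddings must have the same dimension")
    return [sum(e[i] for e in embeddings) / len(embeddings) for i in range(dim)]


def centroid_drift(baseline: Sequence[Vector], production: Sequence[Vector]) -> float:
    """Euclidean distance between the baseline and production centroids."""
    return math.dist(centroid(baseline), centroid(production))


# Hypothetical 3-dimensional embeddings; real text embeddings have hundreds of dimensions.
baseline = [[0.1, 0.2, 0.0], [0.3, 0.0, 0.2]]
production = [[0.9, 0.8, 0.1], [0.7, 0.6, 0.3]]

drift = centroid_drift(baseline, production)
ALERT_THRESHOLD = 0.5  # hypothetical; in practice tuned on historical windows
print(round(drift, 3), drift > ALERT_THRESHOLD)  # 0.854 True
```

A centroid distance is cheap to compute but can miss shifts that leave the mean unchanged, such as one cluster of traffic replacing another symmetrically. It is best treated as a coarse alarm rather than a full diagnosis.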

Conclusion

Ultimately, it’s still early days in the generative AI hype cycle. In the coming years, enterprises should pay close attention to new developments and consider how they can use the technology to improve their operations and gain a competitive advantage.